The Reuse Layer: Governing AI as a Capability, Not a Credential

Stop wiring every app to every vendor. Put all model or tool power behind one safe doorway, so teams reuse instead of rebuild.

What is a “Capability Pool”?

It’s a shared, governed toolbox for your company’s AI work.

Instead of each app holding its own keys and prompts, the pool hides the keys, standardizes the contracts, and tracks cost and quality.

Apps call one gateway; the platform takes care of routing, quotas, bills, and safety.

For example:

curl -X POST http://localhost:8080/v1/run -H "Content-Type: application/json"

-d '{"task":"report.generate",

"input":{"period":"2025-08",

"sourceIds":["sp:/dept/2025-08.xlsx"]},

"policy":"finance",

"tags":{"dept":"Ops"}

}'

Why should we care?

- Ship faster: integrate once, reuse everywhere.

- Control cost and risk: one place to see usage, cost, and who did what.

- Change safely: evaluation tests and human sampling mean upgrades aren’t gambles.

What’s inside the pool?

- Models and compute: LLMs, embeddings, batch inference queues.

- Tools and functions: search, DB access, external APIs, internal actions.

- Knowledge: RAG indexes, curated corpora, permission-aware views.

- Policies: routing rules, quotas, redaction, data residency, risk controls.

What makes it different?

| Not this… | But this… |

|---|---|

| Shared key vault | Stable contracts (Prompt/Tool) that hide raw keys |

| Service catalog only | Orchestration: route, limit, audit, settle cost |

| Offline model registry | Runtime governance with tests, sampling, rollback |

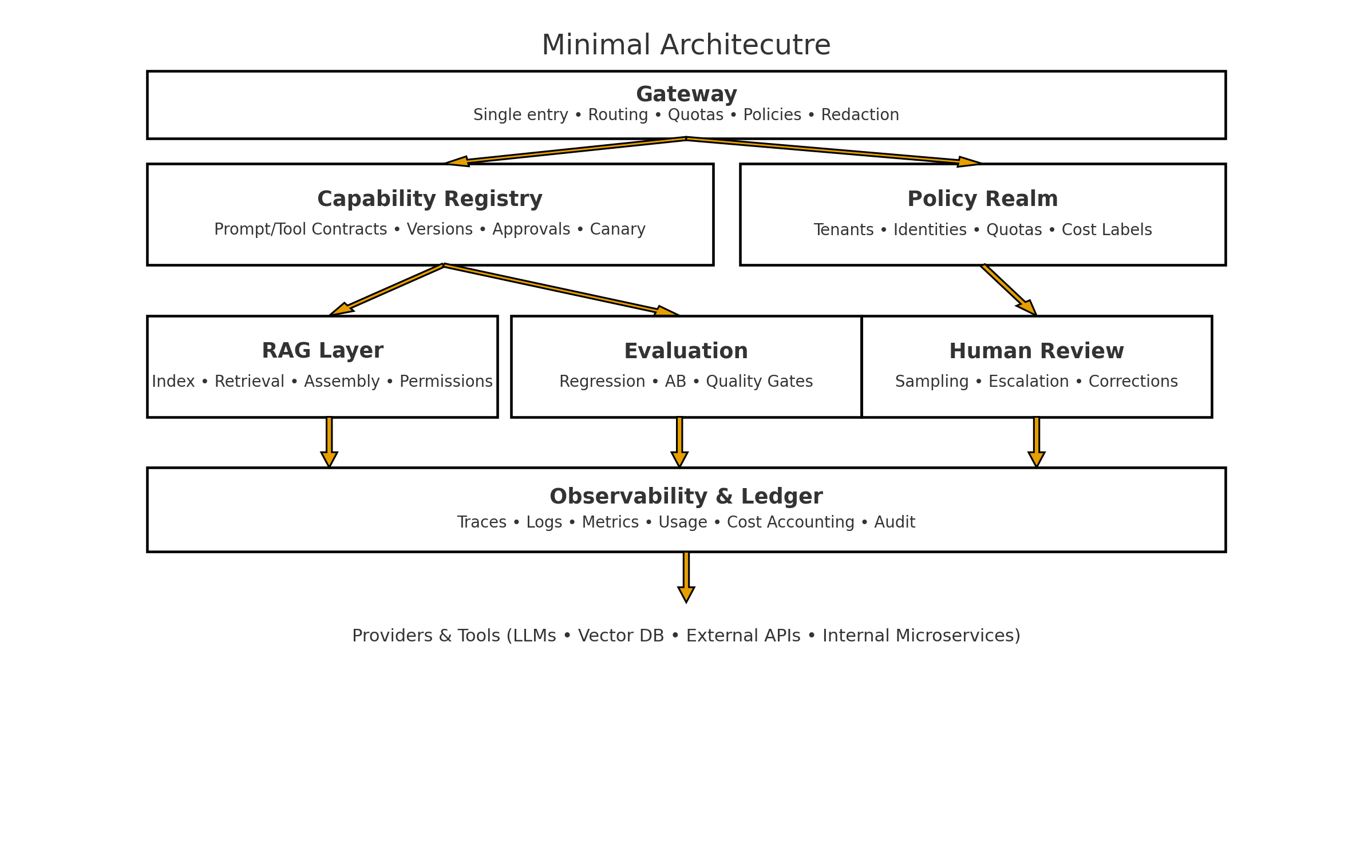

Minimal architecture

- Gateway: single entry; routing, quotas, policies, redaction.

- Capability Registry: Prompt/Tool contracts, versions, approvals, canary.

- RAG Layer: index and retrieval wired to permissions.

- Evaluation: regression/AB checks before rollouts.

- Human Review: smart sampling and escalation when needed.

- Observability and Ledger: traces, logs, metrics, usage and cost.

How do apps talk to the pool?

Inputs: task (what to do), input (data), policy (rules), tags (for cost/ownership)

Outputs: result and a trace with usage, cost, route, eval status, and review status.

Example trace (conceptual):

{

"traceId": "9e1a...",

"contract": "prompt:report.generate@v2",

"route": "gptx:2025-08-canary",

"quotaUsed": {"tokens": 18342},

"cost": {"currency": "USD", "amount": 1.92, "tags": {"dept":"Ops"}},

"evals": {"suite":"regression-v5","status":"pass"},

"humanReview": {"sampled": true, "status": "approved"}

}

What are our KPIs?

- Reuse rate: % of capabilities used by 2+ apps

- Blast radius: apps affected per prompt/model change (lower is better)

- Time‑to‑availability: request to usable in prod (median)

- Cost visibility: % spend with app/team/use‑case attribution

- Compliance coverage: audit completeness; human sampling hit rate